Text2Chart31 Dataset

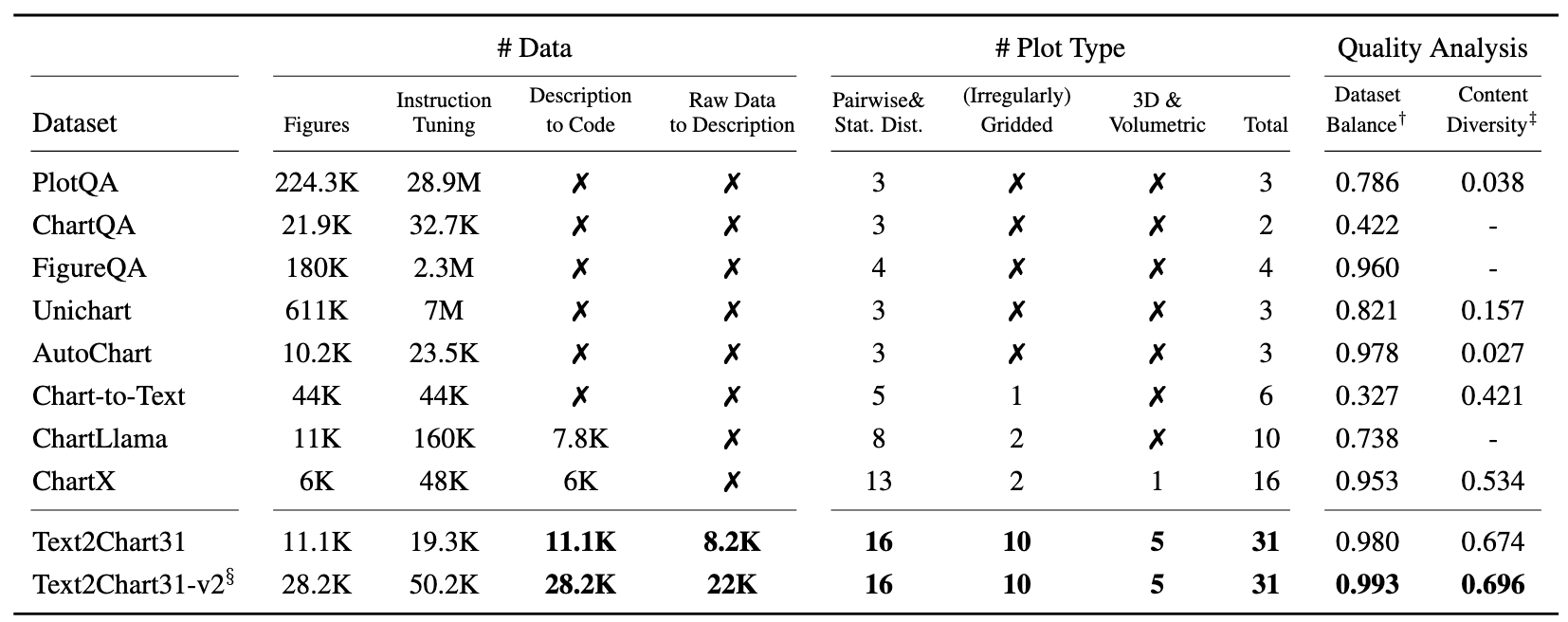

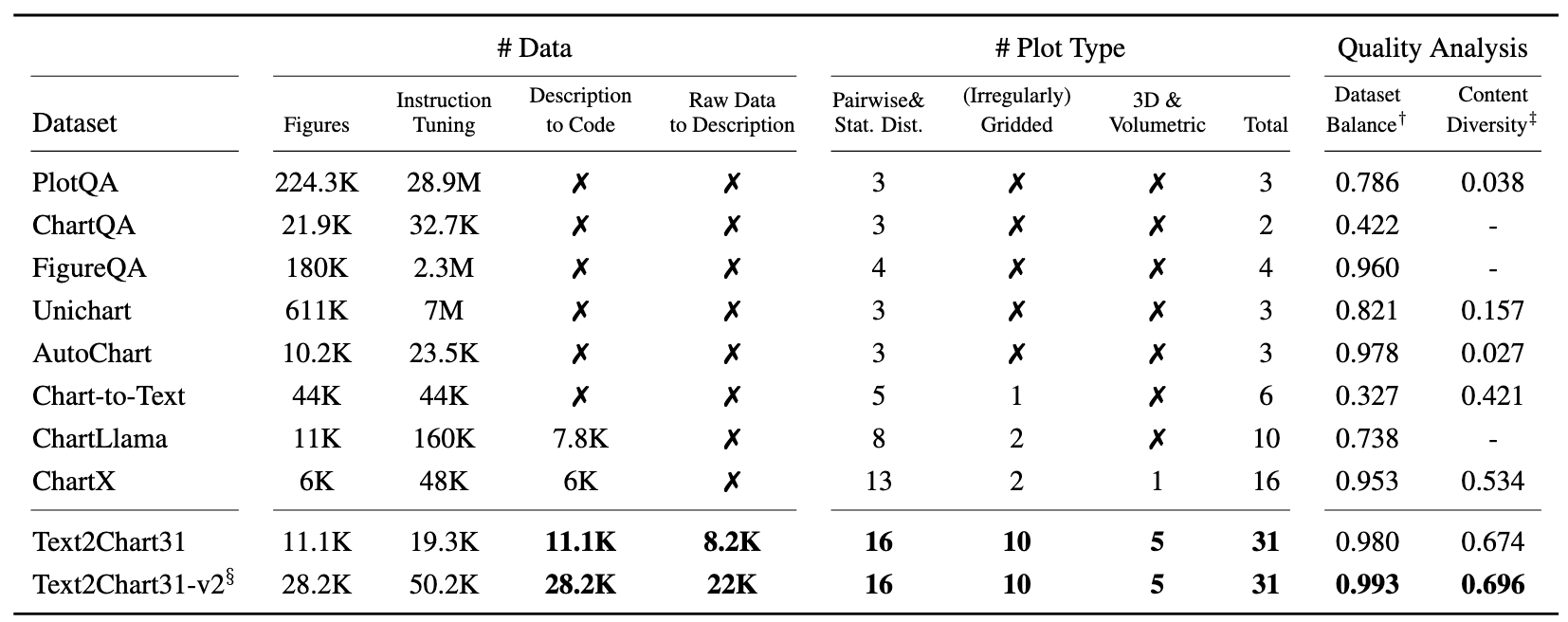

We develop a hierarchical plot generation pipeline leveraging GPT-3.5-turbo and GPT-4. Our newly contributed Text2Chart31 dataset supports 31 plot types based on Matplotlib with 11.1K data points. We outline its key characteristics in Table 1, comparing it with existing datasets in the data visualization domain.

The Text2Chart31 dataset D consists of 11,128 data points, each of which is a tuple (x, c, d, r, y) that includes a textual plot description (x), its corresponding code (c), and the resulting plot (y).

For 8,166 data points, we additionally include a raw data table (d) and intermediate reasoning steps (r) to generate descriptions.
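As a rough illustration of the tuple described above, a single data point could be modeled as follows. This is a minimal sketch, not the dataset's actual schema: the class name `ChartExample`, the method `has_raw_data`, and the choice to store the plot y as an image path are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChartExample:
    """Hypothetical record type; field names mirror the tuple (x, c, d, r, y)."""
    x: str                   # textual plot description
    c: str                   # Matplotlib code that draws the plot
    y: str                   # resulting plot, stored here as an image path (assumption)
    d: Optional[str] = None  # raw data table, present for only part of the dataset
    r: Optional[str] = None  # intermediate reasoning steps, same subset as d

    def has_raw_data(self) -> bool:
        """True when the record carries both the raw table and the reasoning steps."""
        return self.d is not None and self.r is not None


# Illustrative record without a raw data table or reasoning steps.
example = ChartExample(
    x="A bar chart of sales for three products.",
    c="import matplotlib.pyplot as plt\nplt.bar(['A', 'B', 'C'], [3, 5, 2])",
    y="out.png",
)
```

Making d and r optional reflects that only 8,166 of the 11,128 data points include them.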

Task Definition

Our benchmark is designed to evaluate three tasks:

- Description-to-Chart: Given a plot description x, an algorithm generates its corresponding code c that creates a chart using the Matplotlib library (Hunter, 2007).

- Raw Data-to-Chart: When provided with only a raw data table d, the algorithm generates intermediate reasoning steps r that analyze the raw data and then generates a description x for the most suitable plot type based on the characteristics of the data.

- Code-to-Description: Given the code c for a plot, the model generates a detailed description x of the plot.
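The three tasks can be read as different input/output splits over the same tuple. The sketch below illustrates that reading only. The names `TASKS` and `make_pair` are hypothetical, records are plain dictionaries keyed by the tuple symbols, and the description generated in Raw Data-to-Chart is taken to be x, as in the tuple definition.

```python
from typing import Dict, Optional, Tuple

# Task name -> (input fields, output fields), using the symbols of (x, c, d, r, y).
TASKS: Dict[str, Tuple[Tuple[str, ...], Tuple[str, ...]]] = {
    "description_to_chart": (("x",), ("c",)),
    "raw_data_to_chart": (("d",), ("r", "x")),
    "code_to_description": (("c",), ("x",)),
}


def make_pair(record: Dict[str, Optional[str]], task: str):
    """Split a record into (inputs, targets) for a task.

    Returns None when the record lacks a field the task needs, for example
    a record without a raw data table d used for raw_data_to_chart.
    """
    inputs, outputs = TASKS[task]
    if any(record.get(key) is None for key in inputs + outputs):
        return None
    return (
        {key: record[key] for key in inputs},
        {key: record[key] for key in outputs},
    )
```

Under this sketch, a record that has no raw data table simply yields no pair for Raw Data-to-Chart, while it remains usable for the other two tasks.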

Method

Our proposed algorithm is as below:

Experiments

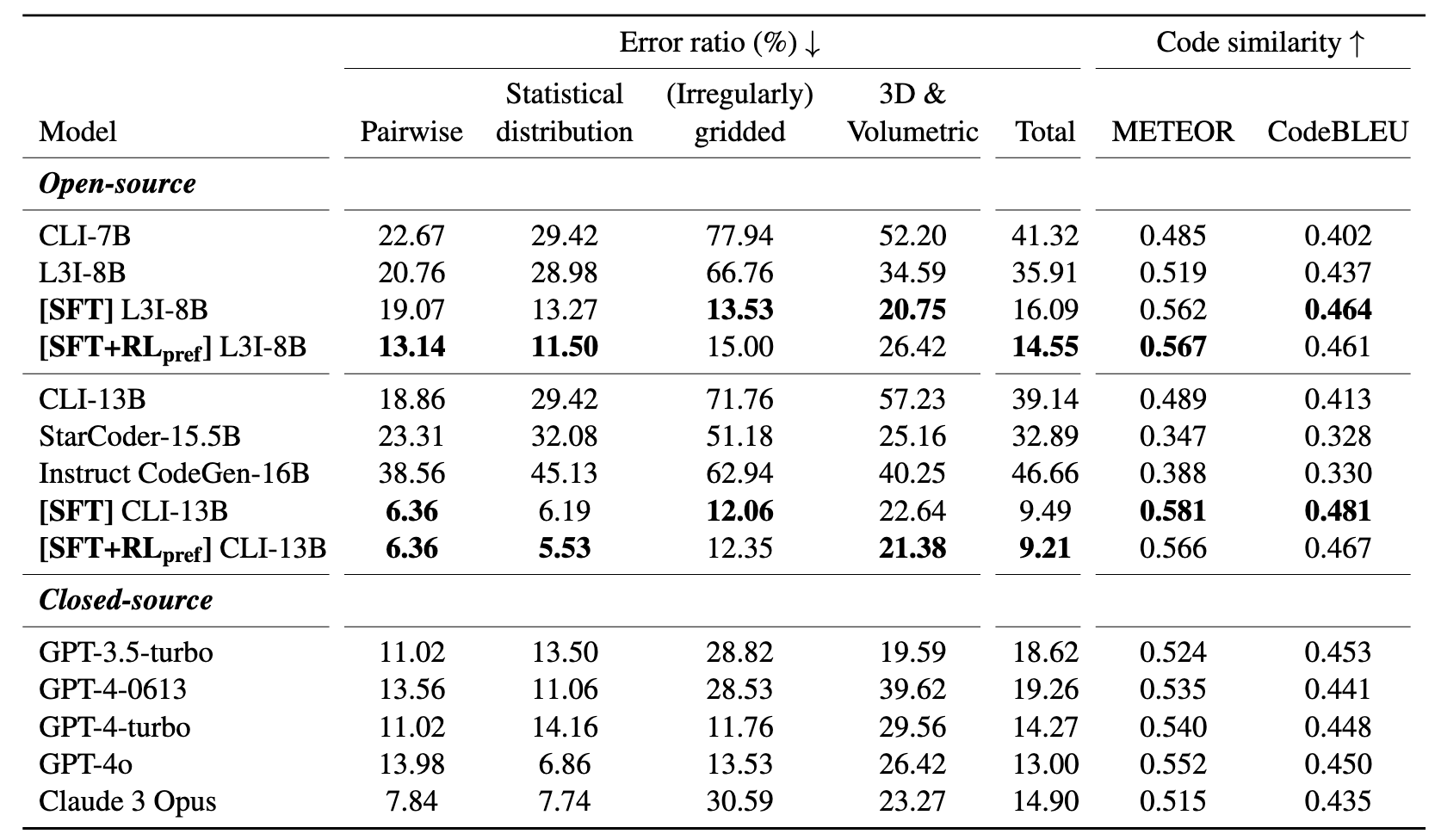

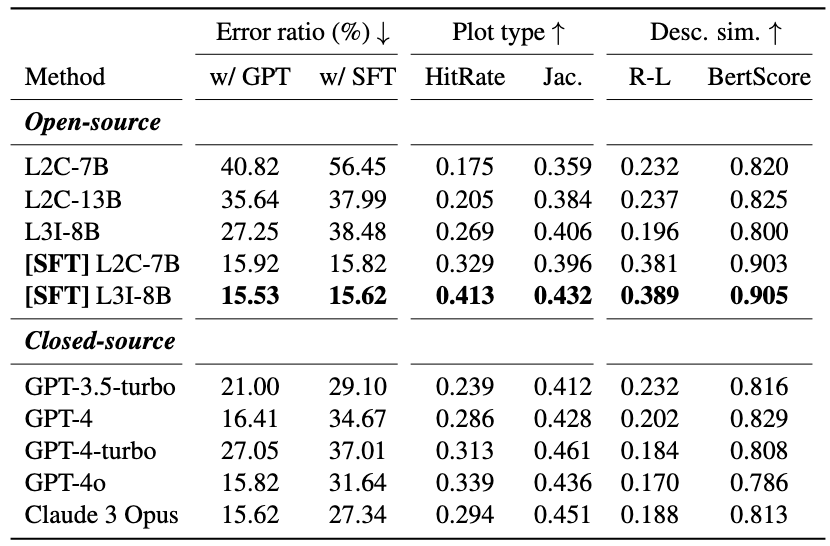

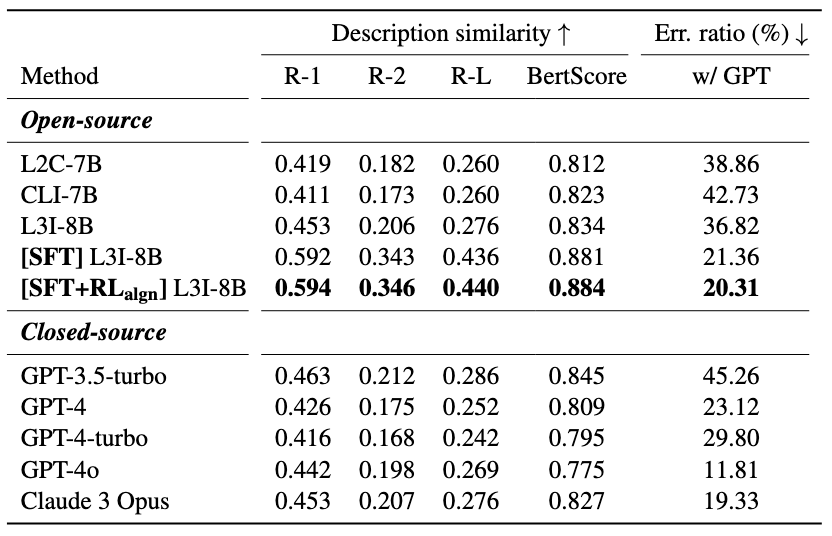

Our results demonstrate that our method outperforms state-of-the-art (SOTA) models.